5 Types of AI Agents Explained: A Practical Guide for AI Development Services

As artificial intelligence continues to reshape how businesses operate, organizations are increasingly turning to AI development services to build systems that are not only intelligent, but also autonomous, scalable, and adaptive. At the heart of these systems are AI agents—core components that enable software to perceive environments, make decisions, and act toward defined objectives.

For enterprises planning AI-powered products, automation platforms, or data-driven decision systems, understanding AI agent types is not a theoretical exercise. It is a practical foundation for choosing the right architecture, controlling complexity, and maximizing long-term ROI. Below, we explore the five fundamental types of AI agents, highlighting their key features, typical use cases, and how they are applied in professional AI development services.

What Is an AI Agent?

An AI agent is a software entity designed to operate within an environment by continuously following a cycle of perception, decision-making, and action. Unlike traditional software programs that execute fixed instructions, AI agents can react dynamically, reason about outcomes, and—in more advanced cases—learn from experience.

In modern AI development services, agent-based systems are widely used to support automation, intelligent workflows, predictive analytics, robotics, and conversational AI, enabling businesses to move beyond static logic toward adaptive intelligence.
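The perception, decision-making, and action cycle described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a production design: `CounterEnvironment`, `decide`, and `run` are hypothetical names invented for this example, and the "environment" is just a single integer.

```python
from typing import List


class CounterEnvironment:
    """Hypothetical toy environment: a single integer the agent can change."""

    def __init__(self, value: int = 0) -> None:
        self.value = value

    def observe(self) -> int:
        # Perception: expose the current state to the agent.
        return self.value

    def apply(self, action: str) -> None:
        # Action: the agent's choice changes the environment.
        if action == "increment":
            self.value += 1
        elif action == "decrement":
            self.value -= 1


def decide(percept: int, target: int) -> str:
    """Decision-making: pick an action that moves the value toward the target."""
    if percept < target:
        return "increment"
    if percept > target:
        return "decrement"
    return "wait"


def run(env: CounterEnvironment, target: int, max_steps: int = 10) -> List[str]:
    """Repeat the perceive -> decide -> act cycle until nothing is left to do."""
    history: List[str] = []
    for _ in range(max_steps):
        percept = env.observe()
        action = decide(percept, target)
        if action == "wait":
            break
        env.apply(action)
        history.append(action)
    return history


env = CounterEnvironment(0)
history = run(env, target=3)
```

Each of the five agent types below can be read as a different way of implementing the `decide` step.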


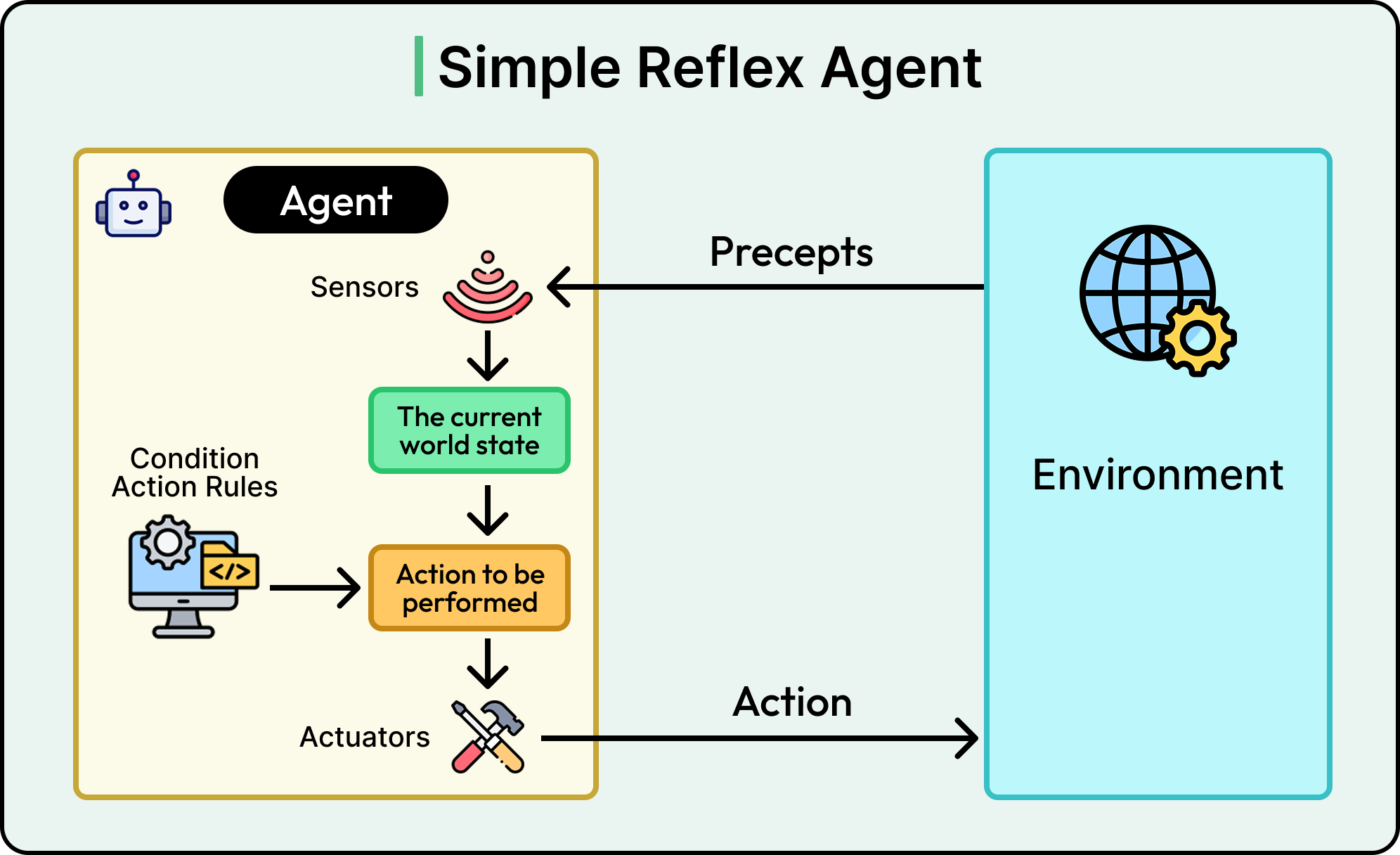

1. Simple Reflex Agents

Simple reflex agents represent the most basic form of intelligent behavior. They operate by mapping specific conditions directly to predefined actions, without retaining any memory of past states or anticipating future outcomes.

Key Features

At this level, intelligence is driven purely by rules. The agent reacts instantly to input signals, making it efficient and predictable but limited in flexibility.

- Operate on condition–action rules

- Respond only to the current environment state

- No memory or learning capability

- Fast execution with minimal computational cost
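As a rough sketch of condition-action rules, the following example maps a temperature reading directly to an action. The thresholds, the action names, and the `simple_reflex_agent` function are assumptions made for illustration only.

```python
from typing import Callable, List, Tuple

# Each rule pairs a condition on the current percept with a predefined action.
Rule = Tuple[Callable[[float], bool], str]

RULES: List[Rule] = [
    (lambda t: t > 30.0, "cooling_on"),   # hypothetical upper threshold
    (lambda t: t < 18.0, "heating_on"),   # hypothetical lower threshold
]


def simple_reflex_agent(temperature_c: float) -> str:
    """Return the action of the first matching rule; no memory, no lookahead."""
    for condition, action in RULES:
        if condition(temperature_c):
            return action
    return "no_op"
```

Because the function keeps no state, the same reading always produces the same action, which is what makes this kind of agent predictable and cheap to run.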

Common Use Cases

Because of their simplicity, these agents are well-suited for stable, highly controlled environments.

- Rule-based automation

- Threshold monitoring and alert systems

- Basic control mechanisms (e.g., temperature or system status checks)

Role in AI Development Services

AI development services often use simple reflex agents for lightweight automation, proof-of-concept implementations, or scenarios where reliability and speed matter more than adaptability.

2. Model-Based Reflex Agents

To overcome the limitations of purely reactive systems, model-based reflex agents introduce an internal representation of the environment. This allows them to make more informed decisions, even when data is incomplete.

Key Features

These agents maintain context by tracking changes over time, enabling more robust responses in dynamic or partially observable environments.

- Maintain an internal model of the environment

- Infer missing or hidden information

- Respond to changes based on current and past states

- More resilient than simple reflex agents
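One way to picture the internal model is a thermostat that keeps its own temperature estimate and falls back on it when the sensor drops a reading. The sketch below is illustrative only: the class name, the 20 °C setpoint, and the assumed drift of 0.5 °C per step are all hypothetical.

```python
from typing import Optional


class ModelBasedThermostat:
    """Keeps an internal temperature estimate so it can act on missing readings."""

    def __init__(self, initial_estimate_c: float = 21.0) -> None:
        self.estimate_c = initial_estimate_c  # internal model of the room
        self.heater_on = False                # remembers the last action taken

    def update_model(self, reading_c: Optional[float]) -> None:
        if reading_c is not None:
            # A fresh reading overrides the estimate.
            self.estimate_c = reading_c
        elif self.heater_on:
            # Assumed dynamics: the heater adds 0.5 degrees per step.
            self.estimate_c += 0.5
        else:
            # Assumed dynamics: the room loses 0.5 degrees per step.
            self.estimate_c -= 0.5

    def act(self, reading_c: Optional[float]) -> str:
        self.update_model(reading_c)
        self.heater_on = self.estimate_c < 20.0  # hypothetical setpoint
        return "heater_on" if self.heater_on else "heater_off"


agent = ModelBasedThermostat()
# The sensor reports once, then returns nothing for two steps.
actions = [agent.act(r) for r in [19.0, None, None]]
```

In this run the agent keeps heating through the first missing reading and switches off once its estimate reaches the setpoint, even though the sensor never reported that temperature.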

Common Use Cases

Model-based reflex agents are commonly applied where environmental awareness is essential.

- Smart home and IoT platforms

- Robotics navigation systems

- Industrial monitoring and diagnostics

Role in AI Development Services

In AI development services, model-based agents are frequently used for context-aware systems, especially in IoT, embedded AI, and robotics solutions where sensor reliability can vary.

3. Goal-Based Agents

Goal-based agents move beyond reactive behavior by explicitly considering future outcomes. Their decisions are guided by defined objectives, allowing them to evaluate different action paths before selecting the most suitable one.

Key Features

These agents rely on planning and search techniques to align actions with desired results.

- Operate based on clearly defined goals

- Evaluate possible future states

- Use planning and decision-making algorithms

- Greater flexibility than reflex-based agents
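The planning step can be illustrated with a breadth-first search over a small graph of locations. This is only a sketch: the graph, the node names, and the `plan` function are made up for the example, and real planners typically use richer state and cost models.

```python
from collections import deque
from typing import Dict, List, Optional

# Hypothetical map: each location lists the locations reachable from it.
GRAPH: Dict[str, List[str]] = {
    "A": ["B", "C"],
    "B": ["D"],
    "C": ["D", "E"],
    "D": ["F"],
    "E": ["F"],
    "F": [],
}


def plan(start: str, goal: str, graph: Dict[str, List[str]]) -> Optional[List[str]]:
    """Breadth-first search: return a path with the fewest steps, or None."""
    frontier = deque([[start]])   # paths still to be extended
    visited = {start}
    while frontier:
        path = frontier.popleft()
        node = path[-1]
        if node == goal:          # goal test on the simulated future state
            return path
        for neighbor in graph.get(node, []):
            if neighbor not in visited:
                visited.add(neighbor)
                frontier.append(path + [neighbor])
    return None


route = plan("A", "F", GRAPH)
```

Unlike a reflex agent, the agent here evaluates sequences of future states before committing to its first move.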

Common Use Cases

Goal-based reasoning is essential in scenarios where achieving an outcome matters more than following fixed rules.

- Autonomous navigation and route planning

- Workflow orchestration and task management

- Game AI and strategic simulations

Role in AI Development Services

AI development services leverage goal-based agents for intelligent planning systems, enabling businesses to automate complex processes while maintaining alignment with operational objectives.

4. Utility-Based Agents

While goal-based agents only distinguish between outcomes that satisfy the objective and outcomes that do not, utility-based agents go a step further by ranking how well each outcome achieves it. They score the possible outcomes of each action and select the action with the highest expected utility.

Key Features

Decision-making is driven by a utility function that quantifies preferences, trade-offs, and uncertainty.

- Evaluate outcomes using a utility function

- Balance competing objectives

- Support probabilistic and optimization-based reasoning

- Make explicit, comparable trade-offs when outcomes are uncertain
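A utility function under uncertainty is often expressed as expected utility: the sum of each outcome's utility weighted by its probability. The sketch below applies that idea to a made-up pricing decision; the option names, probabilities, and utility values are invented for illustration.

```python
from typing import Dict, List, Tuple

Outcome = Tuple[float, float]  # (probability, utility)


def expected_utility(outcomes: List[Outcome]) -> float:
    """Probability-weighted sum of the utilities of all possible outcomes."""
    return sum(probability * utility for probability, utility in outcomes)


def choose_action(options: Dict[str, List[Outcome]]) -> str:
    """Pick the action whose outcomes have the highest expected utility."""
    return max(options, key=lambda name: expected_utility(options[name]))


# Hypothetical numbers: chance of a sale and profit per sale at each price.
PRICING_OPTIONS: Dict[str, List[Outcome]] = {
    "low_price": [(0.9, 10.0), (0.1, 0.0)],    # expected utility about 9
    "mid_price": [(0.6, 20.0), (0.4, 0.0)],    # expected utility about 12
    "high_price": [(0.2, 40.0), (0.8, 0.0)],   # expected utility about 8
}

best = choose_action(PRICING_OPTIONS)
```

A goal-based agent with the goal "make a sale" would favor the low price; the utility-based agent picks the middle price because it balances sale probability against profit.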

Common Use Cases

Utility-based agents are widely used in data-intensive, optimization-driven applications.

- Recommendation engines

- Dynamic pricing and revenue optimization

- Supply chain and logistics planning

- Financial and risk analysis systems

Role in AI Development Services

For advanced decision-support systems, AI development services rely on utility-based agents to help businesses optimize performance, cost, and user experience simultaneously.

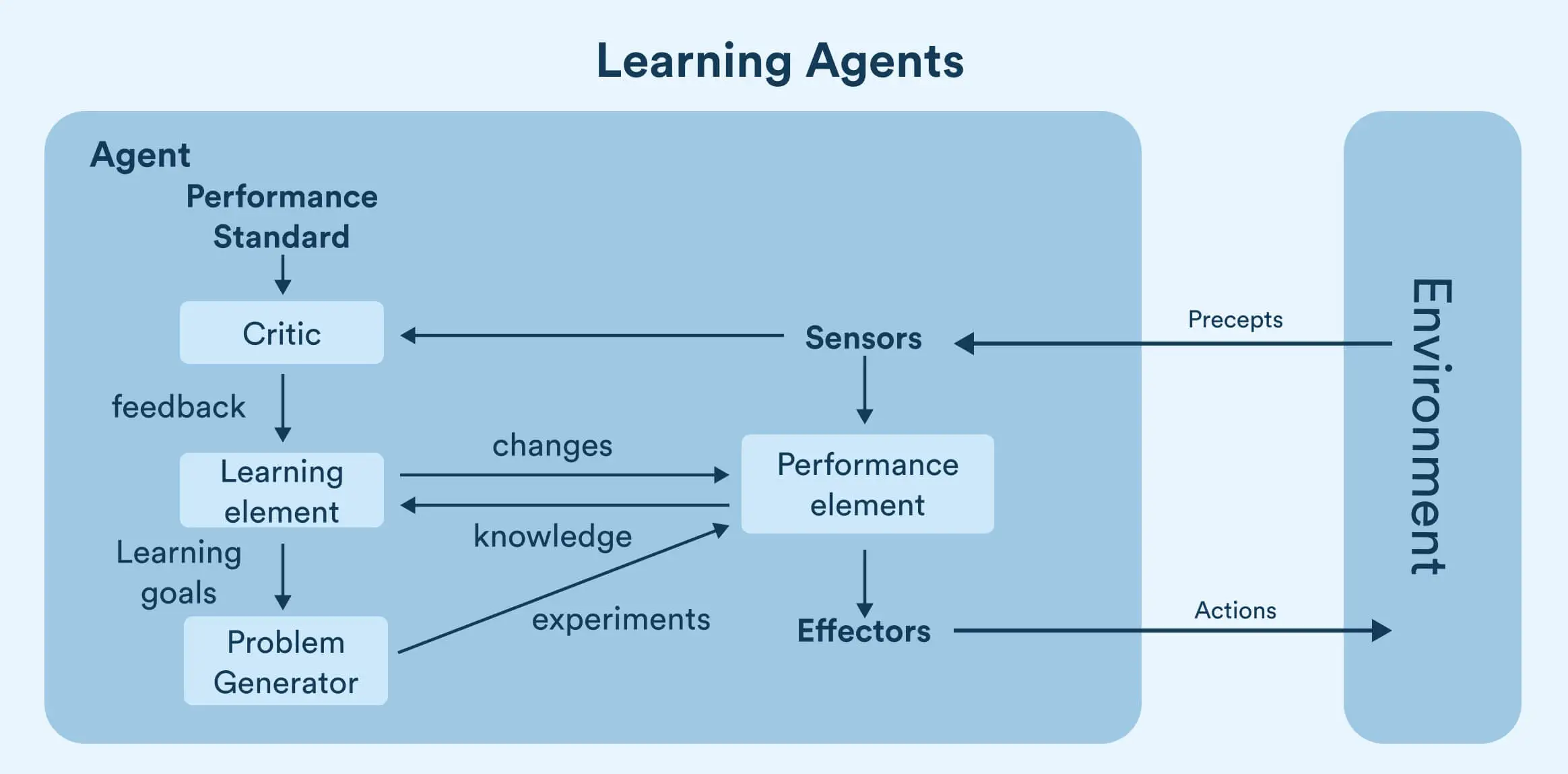

5. Learning Agents

Learning agents add the ability to improve with experience, a capability that can be layered on top of any of the previous agent types. Instead of relying solely on predefined rules or models, they continuously improve performance by learning from data, feedback, and interaction outcomes.

Key Features

These agents evolve over time, making them ideal for environments that change or scale rapidly.

- Learn from experience and feedback

- Incorporate machine learning models

- Adapt behavior dynamically

- Reduce the need for manual rule updates
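Learning from feedback can be sketched as an agent that keeps a running value estimate per action and nudges it toward each observed reward. Everything here is hypothetical (the class, the action names, the reward values), and the sketch deliberately omits exploration, which a practical learning agent would usually need.

```python
from typing import Dict, List


class LearningAgent:
    """Keeps one value estimate per action and updates it from reward feedback."""

    def __init__(self, actions: List[str], learning_rate: float = 0.5) -> None:
        self.values: Dict[str, float] = {action: 0.0 for action in actions}
        self.learning_rate = learning_rate

    def choose(self) -> str:
        # Greedy choice: the action with the highest learned value so far.
        return max(self.values, key=lambda action: self.values[action])

    def learn(self, action: str, reward: float) -> None:
        # Move the estimate a fraction of the way toward the observed reward.
        old = self.values[action]
        self.values[action] = old + self.learning_rate * (reward - old)


agent = LearningAgent(["template_a", "template_b"])
agent.learn("template_a", 0.2)   # weak feedback for the first option
agent.learn("template_b", 1.0)   # strong feedback for the second option
agent.learn("template_b", 1.0)
preferred = agent.choose()
```

No rule was edited by hand between the first and last call; the change in behavior comes entirely from the feedback the agent received.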

Common Use Cases

Learning agents power many of today’s most impactful AI applications.

- Conversational AI and intelligent chatbots

- Fraud detection and anomaly identification

- Personalized marketing and recommendation systems

- Predictive analytics and forecasting

Role in AI Development Services

Learning agents are a cornerstone of modern AI development services, enabling businesses to build self-improving systems that grow smarter as data volume and usage increase.

Choosing the Right AI Agent Architecture

In practice, enterprise-grade AI solutions rarely rely on a single agent type. Experienced AI development services providers often design hybrid architectures, combining multiple agent models to balance performance, scalability, and cost.

Key considerations include:

- Business objectives and decision complexity

- Data availability and feedback mechanisms

- Required level of autonomy and adaptability
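As one possible illustration of a hybrid architecture, the sketch below places a reflex rule in front of a utility-based choice, so that a hard safety condition always wins over optimization. The function names, the 0.95 load threshold, and the action scores are assumptions made for this example.

```python
from typing import Dict, Optional


def safety_reflex(cpu_load: float) -> Optional[str]:
    """Reflex layer: a hard rule that fires regardless of any optimization."""
    return "shed_load" if cpu_load > 0.95 else None


def utility_layer(scores: Dict[str, float]) -> str:
    """Utility layer: pick the action with the highest (precomputed) score."""
    return max(scores, key=lambda action: scores[action])


def hybrid_agent(cpu_load: float, scores: Dict[str, float]) -> str:
    """Try the cheap reflex rule first; fall back to the utility-based choice."""
    override = safety_reflex(cpu_load)
    return override if override is not None else utility_layer(scores)


SCORES = {"scale_up": 0.7, "hold": 0.4}  # hypothetical utility scores
```

Layering the agents this way keeps the fast, predictable rule for critical conditions while leaving everyday decisions to the more flexible component.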

Conclusion

AI agents are the building blocks of intelligent systems, ranging from simple rule-based automation to advanced learning-driven intelligence. Understanding their key features and use cases allows organizations to make informed architectural decisions and fully leverage AI development services.

By selecting the right agent types—and implementing them with experienced technical partners—businesses can build scalable AI solutions that deliver long-term strategic value.

Relipa Software

Relipa Co., Ltd. is a Vietnam-based software development company established in April 2016. After two years of growth, our Japanese branch – Relipa Japan – was officially founded in July 2018.

We provide services in MVP development, web and mobile application development, and blockchain solutions. With a team of over 100 professional IT engineers and experienced project managers, Relipa has become a reliable partner for many enterprises and has successfully delivered more than 500 projects for startups and businesses worldwide.