Unlocking The Power of ChatGPT API (GPT-4): A Complete Guide

On March 14, 2023, OpenAI released the official GPT-4 model. Detailed information is published on the OpenAI blog. For background on developments since ChatGPT was released in November 2022, see the earlier posts on our blog.

OpenAI says the new model, GPT-4, excels at understanding images and text, and calls it “the latest milestone in its efforts to scale up deep learning.”

GPT-4 will be available on ChatGPT Plus (with usage limits) only for subscribed users from March 15, 2023, and developers can sign up for a waiting list to access the API.

What are ChatGPT API (gpt-4) and Whisper APIs?

ChatGPT API (Model GPT-4)

Model: OpenAI has released GPT-4, an advanced AI model for understanding images and text, which it describes as “the latest milestone in its efforts to scale up deep learning.”

GPT-4 is available through ChatGPT Plus (with usage limits) for paying OpenAI users from March 15, 2023, and developers can join OpenAI’s waitlist for API access.

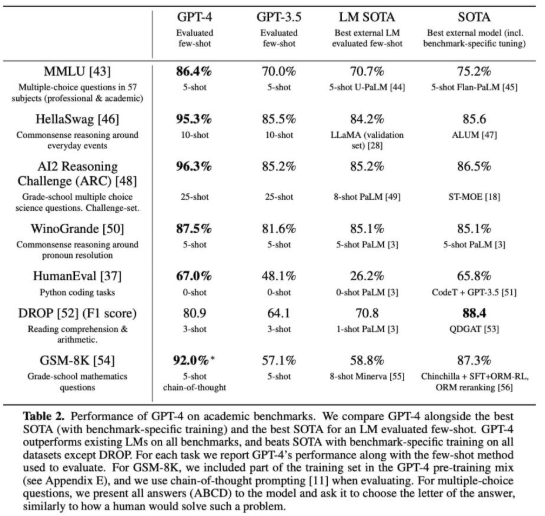

While GPT-3.5, the predecessor of GPT-4, could only accept text, GPT-4 accepts both text and image input and demonstrates human-level performance on various professional and academic benchmarks. For example, GPT-4 passes a simulated bar exam with a score in the top 10% of test takers, whereas GPT-3.5 scored in about the bottom 10%.

OpenAI spent 6 months “iteratively aligning” GPT-4, using lessons from its in-house adversarial testing program and from ChatGPT, to improve factuality, steerability, and adherence to guardrails. OpenAI called the outcome “the best result ever”. As with past GPT models, GPT-4 was trained using publicly available data, including public web pages, and data licensed by OpenAI.

GPT-4-vs GPT-3.5

Reference: OpenAI

OpenAI worked with Microsoft to develop a “supercomputer” from scratch on the Azure cloud and used it to train GPT-4.

In a blog post announcing GPT-4, OpenAI said, “In everyday conversation, the difference between GPT-3.5 and GPT-4 may be subtle. GPT-4 is more reliable, creative, and capable of handling more nuanced and ambiguous instructions than GPT-3.5.”

Another interesting feature of GPT-4 is its ability to recognize images and text. GPT-4 is able to understand and interpret relatively complex images with captions. For example, it is possible to identify a Lightning cable adapter from an image of an iPhone being charged.

However, this image understanding capability is not yet available for all needs. OpenAI is first testing it with a single partner, Be My Eyes. Through Be My Eyes’ new Virtual Volunteer feature, GPT-4 can answer questions about the images sent to it.

The most significant improvement in GPT-4 is the introduction of steerability tools. Specifically, the new API feature “system messages” allows developers to prescribe style and task by writing specific instructions. System messages, which set the tone and establish boundaries for interactions with the AI, will also be available in ChatGPT in the future.

For example, a system message might instruct GPT-4 to: “You (GPT-4) are a tutor who always responds in a Socratic style. Try to ask the right questions to help students think for themselves, rather than giving them answers. Questions should be tailored to the student’s interests and knowledge, and the problem should be broken down into easier parts and adjusted to the appropriate level for the student.”
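
As a minimal sketch of how such a system message might be expressed, the snippet below builds a chat message list with one system message followed by one user message. The role names follow the chat message format; the helper name `build_messages` and the sample question are illustrative assumptions, not part of OpenAI's documentation.

```python
def build_messages(system_prompt: str, user_prompt: str) -> list[dict[str, str]]:
    """Return a chat message list: one system message, then one user message."""
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_prompt},
    ]


# System prompt adapted from the Socratic tutor example in the text.
SOCRATIC_TUTOR = (
    "You are a tutor who always responds in a Socratic style. "
    "Ask the right questions to help students think for themselves "
    "rather than giving them answers."
)

# The user question is a made-up example.
messages = build_messages(SOCRATIC_TUTOR, "How do I solve 3x + 2 = 11?")
for message in messages:
    print(f"{message['role']}: {message['content']}")
```

Keeping the system message first means the instructions apply to everything the user says afterwards.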

However, even with system messages and other upgrades, OpenAI admits that GPT-4 is still far from perfect. GPT-4 can still get facts wrong and make reasoning errors, sometimes with great confidence. In one example, GPT-4 described Elvis Presley as the “son of an actor”, which is clearly false.

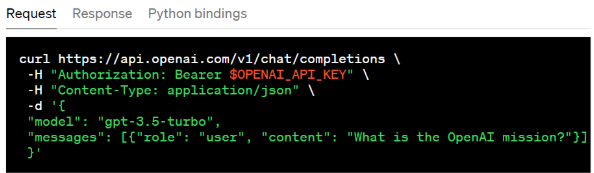

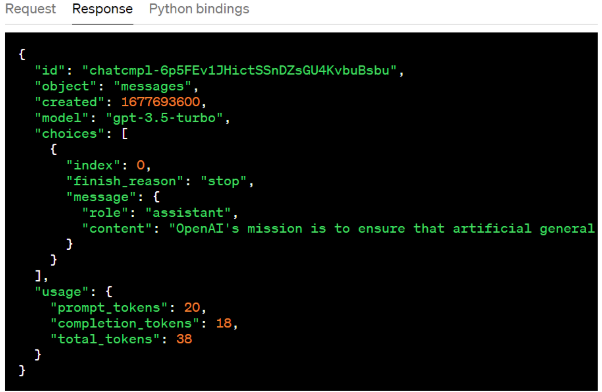

API: Traditionally, GPT models have consumed unstructured text, which is represented to the model as a series of “tokens”. The ChatGPT models, on the other hand, consume a series of messages together with metadata. (Under the hood, the input is still rendered to the model as a series of “tokens”, using a new format called Chat Markup Language, or “ChatML”.)

OpenAI has created a new endpoint for interacting with the ChatGPT model.

OpenAI-API

Reference: OpenAI

For more information on the ChatGPT API, please refer to the OpenAI chat guide.
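
To make the message-based format concrete, here is a hedged sketch of the JSON body a chat request might carry and of how a reply could be read from a response. The field names (`model`, `messages`, `choices`, `message`, `content`) follow the chat completions format; the helper names and the sample response are assumptions for illustration, and no request is actually sent.

```python
import json


def build_chat_body(messages: list, model: str = "gpt-4") -> str:
    """Serialize a chat request body: a model name plus a list of messages."""
    return json.dumps({"model": model, "messages": messages})


def extract_reply(response: dict) -> str:
    """Pull the assistant's text out of a chat-completion-shaped response."""
    return response["choices"][0]["message"]["content"]


body = build_chat_body([{"role": "user", "content": "Hello!"}])
print(body)

# A hand-written response with the expected shape, for illustration only.
sample_response = {
    "choices": [{"message": {"role": "assistant", "content": "Hi there!"}}]
}
print(extract_reply(sample_response))
```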

“Whisper” API

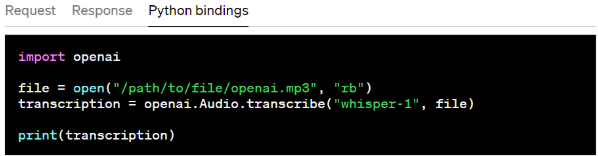

Whisper, the speech-to-text model that OpenAI open-sourced in September 2022, has been highly praised by the IT industry. However, it can be difficult to run on your own. The large-v2 model is now available through the API, giving developers convenient on-demand access for $0.006 per minute. In addition, OpenAI says its highly optimized service stack delivers faster performance than other services.

The Whisper API accepts audio in various formats (m4a, mp3, mp4, mpeg, mpga, wav, webm) through its transcription (transcribes in the source language) and translation (transcribes into English) endpoints.

Whisper-API

Reference: OpenAI

For more information on the Whisper API, please refer to the Speech-to-Text Guide.
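
The choice between the two endpoints and the format check can be sketched as below. The list of formats comes from the text above; the endpoint paths and the `whisper-1` model name reflect OpenAI's documentation as we understand it and should be treated as assumptions to verify, and `plan_whisper_call` is a hypothetical helper that only prepares the call without sending audio.

```python
from pathlib import Path

# Formats listed in the article.
SUPPORTED_FORMATS = {"m4a", "mp3", "mp4", "mpeg", "mpga", "wav", "webm"}

# Assumed endpoint URLs; check the Speech-to-Text Guide before relying on them.
ENDPOINTS = {
    "transcribe": "https://api.openai.com/v1/audio/transcriptions",
    "translate": "https://api.openai.com/v1/audio/translations",
}


def plan_whisper_call(filename: str, task: str = "transcribe") -> dict:
    """Validate the audio format and pick the endpoint for the given task."""
    extension = Path(filename).suffix.lower().lstrip(".")
    if extension not in SUPPORTED_FORMATS:
        raise ValueError(f"Unsupported audio format: {extension!r}")
    if task not in ENDPOINTS:
        raise ValueError(f"Unknown task: {task!r}")
    return {"url": ENDPOINTS[task], "model": "whisper-1", "file": filename}


print(plan_whisper_call("interview.mp3"))
print(plan_whisper_call("lecture.wav", task="translate"))
```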

Advantages of GPT-4 and Whisper APIs

The advantages of GPT-4 are:

- Look at an image and understand its content

Input: What can I make with these ingredients?

Reference: OpenAI

Output:

There are many options for what you can make with these ingredients! Some possibilities include:

- Pancakes or waffles

- Crepes

- French toast

- Omelette or frittata

- Quiche

- Custard or pudding

- Cake or cupcakes

- Muffins or bread

- Cookies or biscuits

The most striking change in this versatile machine learning system is that it is “multimodal”, meaning it can understand more than one “modality” of information. ChatGPT and GPT-3 were limited to text: they could read and write, but nothing more, which made them inadequate for many applications.

When given images, however, GPT-4 processes them in fine detail to find relevant information. You can simply ask it to describe what is in an image, but more remarkably, its understanding goes much deeper than that. In one example given by OpenAI, GPT-4 explains the joke in an image of a ridiculously oversized iPhone connector. In the partnership with Be My Eyes, that understanding goes even deeper.

Reference: Be My Eyes

For example, in the Be My Eyes video, GPT-4:

- Describes the pattern of a dress

- Identifies plants

- Explains how to get to a specific piece of equipment in the gym

- Translates labels and provides recipes

- Interprets maps

It handles many other tasks as well. If you ask the right questions, you can see that GPT-4 understands what is in the image by taking in its background and context. For example, it can answer whether the dress shown in an image is suitable for an interview.

- Having long-term memory

Large language models are trained on millions of web pages, books, and other text data, but how much can they keep in mind while actually conversing with a user? There are limits. GPT-3.5 and older versions of ChatGPT have a limit of 4,096 tokens, which at roughly 0.75 English words per token is about 3,000 words, or several pages of a book.

GPT-4, on the other hand, has a maximum token count of 32,768 (2 to the 15th power), which is about 25,000 words, or the equivalent of roughly 50 pages of a book. In other words, the model can keep up to about 50 pages of content in memory during conversations and text generation. So, for example, it can still remember what you said 20 pages earlier, or, if you are writing a story or essay, refer back to what happened 35 pages ago.
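
The token limits can be turned into rough word and page estimates with a few lines of arithmetic. This is only a sketch: the 0.75 words-per-token ratio is a common rule of thumb for English text, and the 500 words-per-page figure is an assumption chosen for illustration, so real numbers will vary with language and layout.

```python
WORDS_PER_TOKEN = 0.75  # rule of thumb for English text; an approximation
WORDS_PER_PAGE = 500    # assumed page density, for illustration only


def estimate_capacity(max_tokens: int) -> tuple[int, int]:
    """Estimate how many words and pages fit in a context of max_tokens."""
    words = int(max_tokens * WORDS_PER_TOKEN)
    pages = words // WORDS_PER_PAGE
    return words, pages


for name, limit in [("GPT-3.5", 4096), ("GPT-4 (32k)", 2 ** 15)]:
    words, pages = estimate_capacity(limit)
    print(f"{name}: {limit} tokens ~ {words} words ~ {pages} pages")
```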

- Supporting multiple languages

The AI world is dominated by English speakers, so data, tests, research papers, and so on are mostly written in English. However, the power of large language models is useful in any language and should be available in languages other than English.

OpenAI reports that GPT-4 answers thousands of multiple-choice questions with high accuracy in 26 languages, including Italian, Ukrainian, and Korean. It performs best in Romance and Germanic languages, but is becoming more versatile in other languages as well.

The results of these language proficiency tests are promising, though they should be read with some caution: the test content was translated from English rather than written natively in each language. Even so, GPT-4 performed very well in languages it had not been specially trained for. It therefore looks approachable for people outside the English-speaking world and has the potential to become a familiar presence there.

The Whisper API is the perfect tool for anyone who needs accurate speech-to-text functionality. Transcribe interviews, create subtitles for your favorite shows, or even develop voice-activated assistants. And it can be used with almost any kind of audio file.

Real-world Use Cases

Duolingo

Duolingo has launched a new subscription tier, “Duolingo Max”, with two new features powered by GPT-4: “Role Play”, an AI conversation partner, and “Explain my Answer”, which explains the rules when you make a mistake. “We needed an AI-powered feature that could be deeply integrated into our app and leverage the gamified aspects of Duolingo that learners love,” said Bodge, product manager.

Duolingo engineers had set out to use GPT-3 to give the previous chat feature a more human-like ability. “We were very close to completion,” said lead engineer Bill Peterson, but “we weren’t quite at the point where we could confidently integrate complex automated chat functionality.”

Duolingo once tried chatting with learners using simple scripted scenarios like “order food,” “meet someone for the first time,” or “buy plane tickets.” But what Duolingo needed, Bodge said, was the ability to have conversations with learners in niche contexts: deep, personalized conversations about things like the fun of basketball or the joy of reaching the top of a mountain. GPT-4, having learned from enough public data, can now produce highly flexible responses, bringing Bodge closer to what he was originally aiming for.

The Duolingo team is very excited about the potential of GPT-4 and believes it will improve learning outcomes by providing a more effective and engaging learning experience. Additionally, Peterson says GPT-4 simplifies the overall engineering process because it’s easy to experiment with.

“We were asked to build a prototype in just one day. That took us from 0 to 95% very quickly, leaving only the last 5% to finish by manually fine-tuning the data.”

Speak

Speak is an AI-powered language learning app that focuses on speaking and builds the best learning pathways. Speak is the fastest-growing English app in South Korea and has already rolled out a new AI-speaking companion product using the Whisper API globally. Whisper’s human-level speech accuracy enables true open-ended conversation practice and highly accurate feedback for language learners of all levels.

How to Use ChatGPT API (GPT-4) and Whisper API

GPT-4 is accessed through the same ChatCompletions API as gpt-3.5-turbo. To get access to the GPT-4 API, sign up on the OpenAI waiting list. OpenAI will start inviting a limited number of developers from March 15, 2023, and plans to gradually scale up while balancing demand and capacity. Researchers studying the social impact of AI or AI alignment issues can also apply for subsidized access through the Researcher Access Program.

Once you have access, you can send text-only requests to the GPT-4 model (image input remains in limited alpha). The gpt-4 model name is automatically updated to OpenAI’s recommended stable model as new versions are released. You can pin the current version by calling gpt-4-0314, which is supported until June 14, 2023.

Get API Key

To use the ChatGPT API, you need to get an API key and make requests according to the API documentation.

- Visit the OpenAI website. If you don’t have an account, sign up for one; if you already have one, log in.

- After logging in, go to the API Keys section of your account.

- From this screen, click the Create new secret key button to create a new secret key. The secret key is displayed only once, so be sure to copy it. If you lose it, delete the old key and create a new one.

Do not share your API key with others or expose it in your browser or other client-side code. To ensure the security of your account, OpenAI may automatically delete API keys that are found to be public.

API Endpoints for OpenAI

OpenAI’s API endpoints are URLs that developers can use to send requests and receive responses from OpenAI’s APIs, which provide access to various language models, including GPT-3. The exact endpoint URL may vary depending on the specific API version and model used, but generally follows a similar format including base URL, version number, and endpoint path.

For example, the base URL for OpenAI’s API is typically https://api.openai.com/ and the version number may be included in the URL path, such as v1 for the first version of the API. The endpoint path points to the specific API endpoint to be accessed, such as /completions for requesting text completions from the language model.

Overall, the full API endpoint URL for accessing OpenAI’s API looks like this: https://api.openai.com/v1/completions
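
As a small sketch of the format just described, the snippet below assembles a full endpoint URL from the base URL, version number, and endpoint path mentioned in the text. The helper name `endpoint_url` is an illustrative assumption.

```python
BASE_URL = "https://api.openai.com/"
API_VERSION = "v1"


def endpoint_url(path: str) -> str:
    """Join the base URL, version, and endpoint path into one URL."""
    return f"{BASE_URL.rstrip('/')}/{API_VERSION}/{path.lstrip('/')}"


print(endpoint_url("/completions"))
```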

Common parameters required in API requests

Of course, the exact endpoint URL and required parameters may vary depending on the specific use case and API function being accessed.

The required parameters by OpenAI’s API depend on the API endpoint used and the type of request sent. However, there are common parameters that most API requests require, specifically:

- Model: This parameter specifies the name or ID of the language model used to generate the response. For example, GPT-3 models can be accessed using the values ‘davinci’ or ‘curie’, depending on the model you want. As of this writing, the GPT-4 models available through the ChatGPT API are gpt-4 and gpt-4-0314. Developers who specify gpt-4 always get the stable model recommended by OpenAI.

- Prompt: This parameter provides the input text that the language model uses as the starting point for generating a response. The prompt should be formatted according to the requirements of the API endpoint used; the chat endpoint, for example, takes a list of messages instead of a single prompt string.

- API_key: The API key authenticates API requests and must be included in the request header (it is sent as a Bearer token in the Authorization header). An API key is a unique identifier provided to developers who have registered with OpenAI and have been approved for API access.

Other parameters depend on the API endpoint used, such as the temperature parameter to control the randomness of the generated text and the max_tokens parameter to set the maximum length of the generated text. It is important to refer to the API documentation for the endpoint you are using to ensure all required parameters are included in your API request.
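
The parameters above can be gathered into a request body and headers as in the sketch below. The parameter names come from the text; the temperature range of 0 to 2 and the default values are assumptions based on our reading of OpenAI's documentation, and both helper functions are hypothetical.

```python
def build_completion_params(
    model: str, prompt: str, temperature: float = 1.0, max_tokens: int = 16
) -> dict:
    """Collect the common request parameters, rejecting out-of-range values."""
    if not 0.0 <= temperature <= 2.0:  # assumed valid range
        raise ValueError("temperature must be between 0 and 2")
    if max_tokens < 1:
        raise ValueError("max_tokens must be a positive integer")
    return {
        "model": model,
        "prompt": prompt,
        "temperature": temperature,
        "max_tokens": max_tokens,
    }


def auth_headers(api_key: str) -> dict:
    """Build headers that carry the API key as a Bearer token."""
    return {
        "Authorization": f"Bearer {api_key}",
        "Content-Type": "application/json",
    }


params = build_completion_params("davinci", "Once upon a time", 0.2, 32)
print(params)
print(auth_headers("sk-placeholder"))  # placeholder, not a real key
```

A lower temperature makes the output more deterministic, while max_tokens caps how long the generated text can be.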

Setting up a Development Environment for Using OpenAI APIs

To set up a development environment for using OpenAI APIs, you can follow these general steps:

- Sign up for an OpenAI API Key: Before you can use the OpenAI APIs, you need to sign up for an API key. Go to the OpenAI website and follow the instructions to sign up for an API key.

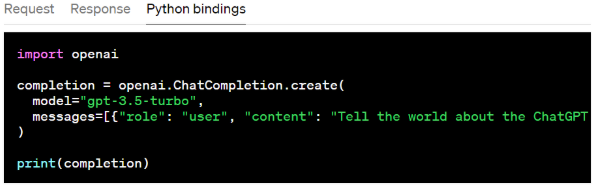

- Choose a Programming Language: Choose a programming language that you are familiar with and that has a client library for the OpenAI API. Some popular programming languages for working with the OpenAI API include Python, JavaScript, Ruby, and Java.

- Install the Client Library: Install the client library for the OpenAI API for your chosen programming language. You can usually install the client library using a package manager, such as pip for Python, npm for JavaScript, or gem for Ruby.

- Create a New Project: Create a new project or open an existing project in your chosen programming language.

- Import the Client Library: Import the OpenAI client library into your project. The exact command or syntax for importing the library will depend on your chosen programming language and the specific client library you are using.

- Set up your API Credentials: Set up your API credentials by storing your API key in a secure location, such as an environment variable or configuration file. Make sure that you do not share your API key with anyone or expose it in your code.

- Make API Calls: Use the OpenAI client library to make API calls to the OpenAI API. Refer to the OpenAI API documentation for information on how to structure API requests and responses.

- Test your Code: Test your code to ensure that it is working as expected. You can use the OpenAI Playground to test your code and explore the OpenAI API functionality.

- Deploy your Code: Deploy your code to a production environment, if necessary.
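
The credential step in the list above might look like the following sketch, which reads the key from an environment variable instead of hard-coding it. The variable name `OPENAI_API_KEY` is the conventional one but is still an assumption about your setup, and `load_api_key` is a hypothetical helper.

```python
import os


def load_api_key(env_var: str = "OPENAI_API_KEY") -> str:
    """Read the API key from the environment and fail clearly if it is missing."""
    api_key = os.environ.get(env_var, "").strip()
    if not api_key:
        raise RuntimeError(f"Set the {env_var} environment variable first.")
    return api_key


try:
    key = load_api_key()
    print(f"Loaded an API key of length {len(key)}")  # never print the key itself
except RuntimeError as error:
    print(error)
```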

These are the general steps for setting up a development environment for using OpenAI APIs. The specific steps may vary depending on your chosen programming language and the client library you are using. As a concrete example, the steps below send a request to the API in Python to generate text from a given prompt and model, with a chosen temperature and maximum number of tokens.

- Get the OpenAI API key

- Install Python and pip: Install Python and pip, the Python package installer. Python can be downloaded from the official website, and pip is usually included in the installation.

- Install OpenAI API client library: OpenAI provides a Python client library to access the API. You can install the library using pip by running pip install openai in your terminal or command prompt.

- API key setup: Define your API key as an environment variable and read it from your code, rather than writing the key into the code itself.

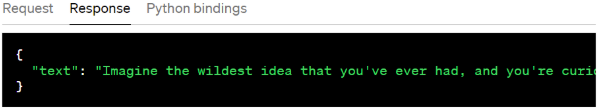

- Testing API requests: After setting up your API key, you can test your API requests by sending a request to the API endpoint with the required parameters. Below is an example of generating text using the GPT-3 model.

Reference: OpenAI

This code sends a request to an API that generates text based on the specified prompt and model, and outputs the generated text. There are various options for API endpoints, and there are restrictions on the models and parameters that can be used. After reading the API documentation, we recommend that you review the available options before submitting your request.
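
A request of the kind described above might be sketched as follows, using only the Python standard library rather than the OpenAI client library. The model name `text-davinci-003`, the default parameter values, and the sample prompt are placeholders chosen for illustration; the request is only sent when an `OPENAI_API_KEY` environment variable is present.

```python
import json
import os
import urllib.request

COMPLETIONS_URL = "https://api.openai.com/v1/completions"


def build_request(
    api_key: str,
    prompt: str,
    model: str = "text-davinci-003",  # placeholder model name
    temperature: float = 0.7,
    max_tokens: int = 64,
) -> urllib.request.Request:
    """Prepare (but do not send) a POST request for a text completion."""
    payload = {
        "model": model,
        "prompt": prompt,
        "temperature": temperature,
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        COMPLETIONS_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def generate_text(request: urllib.request.Request) -> str:
    """Send the request and return the first generated completion."""
    with urllib.request.urlopen(request) as response:
        result = json.load(response)
    return result["choices"][0]["text"].strip()


if __name__ == "__main__":
    api_key = os.environ.get("OPENAI_API_KEY")
    if api_key:
        print(generate_text(build_request(api_key, "Write a tagline for an ice cream shop.")))
    else:
        print("Set OPENAI_API_KEY to send the request.")
```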

By following the steps above, you can set up a development environment for using OpenAI’s API in Python. APIs can be used to automate a variety of tasks such as text generation, translation, language understanding, sentence summarization, and more.

At Relipa, our experienced and tech-savvy engineers are always up to date with new trends and master the latest technology. If you would like consultancy services to integrate GPT-4 into your business, please do not hesitate to contact us.

ChatGPT API (GPT-4) and Whisper API Pricing

ChatGPT API (GPT-4) and Whisper API Usage Fee

The usage fee for ChatGPT API (GPT-4) has been reduced.

For models with 8k context length (e.g. gpt-4 and gpt-4-0314) the prices are:

- $0.03 per 1k prompt tokens

- $0.06 per 1k completion tokens

For models with 32k context length (e.g. gpt-4-32k and gpt-4-32k-0314) the prices are:

- $0.06 per 1k prompt tokens

- $0.12 per 1k completion tokens

OpenAI is still improving model quality for long contexts and welcomes feedback on its performance in your use case. Based on capacity, OpenAI is processing requests for the 8K and 32K engines at different rates, so you may be able to access them at different times.

Usage charges for the Whisper large-v2 model are $0.006 per minute. OpenAI CEO Sam Altman previously estimated the computing cost of each chat at a few cents, but the cost has been significantly reduced this time around.
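
To see how these rates add up, the sketch below estimates the cost of a request from its token counts and the cost of a Whisper job from its duration. The prices are the ones listed above; the function names and the sample token counts are illustrative assumptions, and actual billing may differ.

```python
from decimal import Decimal

# (prompt price, completion price) in USD per 1k tokens, from the list above.
PRICES_PER_1K = {
    "gpt-4": (Decimal("0.03"), Decimal("0.06")),
    "gpt-4-32k": (Decimal("0.06"), Decimal("0.12")),
}
WHISPER_PER_MINUTE = Decimal("0.006")


def chat_cost(model: str, prompt_tokens: int, completion_tokens: int) -> Decimal:
    """Estimate the USD cost of one request from its token counts."""
    prompt_price, completion_price = PRICES_PER_1K[model]
    return (prompt_price * prompt_tokens + completion_price * completion_tokens) / 1000


def whisper_cost(minutes: float) -> Decimal:
    """Estimate the USD cost of transcribing audio of the given length."""
    return WHISPER_PER_MINUTE * Decimal(str(minutes))


print(chat_cost("gpt-4", 1500, 500))      # 1,500 prompt + 500 completion tokens
print(chat_cost("gpt-4-32k", 1500, 500))
print(whisper_cost(10))                   # a 10-minute recording
```

Decimal is used instead of float so that small per-token prices do not pick up rounding noise.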

Tokens Do Not Necessarily Match the Number of Words in a Sentence

A token is a unit used in natural language processing (NLP) that represents a group of meaningful sequences of letters or symbols. It includes words, but it also includes punctuation marks, symbols, and other sequences of characters.

Consider the following sentence: “The quick brown fox jumps over the lazy dog.”

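- Tokens: In natural language processing, a token is a sequence of characters that represents a single meaningful unit of text. For example, with simple word-level tokenization, the tokens in the sentence above would be: “The”, “quick”, “brown”, “fox”, “jumps”, “over”, “the”, “lazy”, “dog”, and the final “.”. GPT models use subword tokenization, so a long or rare word may be split into several tokens, which is why the token count does not necessarily match the word count. Tokens are often used as inputs to machine learning models, where each token is mapped to a numerical representation (such as an integer or a vector) for processing.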

- Text: Text refers to the entire body of written or spoken language, including all its constituent tokens. In the context of natural language processing, the text is often analyzed and processed to extract meaningful insights or generate new text. For example, a machine learning model might be trained on a large corpus of text data to learn how to classify or generate new text based on patterns in the input data.

In summary, tokens are individual units of meaning within the text that are often used as inputs to machine learning models, while text refers to the entire body of written or spoken language that is processed and analyzed in natural language processing applications.
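
The difference between words and tokens can be illustrated with a deliberately simple tokenizer that splits on words and punctuation. This is not the tokenizer GPT models use (they rely on subword units, so real counts will differ); it is only a sketch showing that even a basic scheme yields a token count different from the word count.

```python
import re


def simple_tokenize(text: str) -> list[str]:
    """Split text into word tokens and single punctuation tokens."""
    return re.findall(r"\w+|[^\w\s]", text)


sentence = "The quick brown fox jumps over the lazy dog."
words = sentence.split()
tokens = simple_tokenize(sentence)

print(f"{len(words)} words: {words}")
print(f"{len(tokens)} tokens: {tokens}")
```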

Summary

Since the launch of ChatGPT in November 2022, many tech titans have shared details about their AI strategies. Microsoft has released a chatbot inside Bing that it developed in collaboration with OpenAI. Google has announced that it has developed its own bot, Bard. And Facebook’s parent company, Meta, is also working on similar technology development.

ChatGPT’s breakthrough has accelerated the AI race among tech giants, and many companies now feel a stronger need than ever to respond quickly to new technologies.